| REPORT ATTRIBUTE | DETAILS |
| --- | --- |
| Historical Period | 2020-2023 |
| Base Year | 2024 |
| Forecast Period | 2025-2032 |
| Generative AI ASIC Market Size 2024 | USD 249.68 billion |
| Generative AI ASIC Market, CAGR | 28.60% |
| Generative AI ASIC Market Size 2032 | USD 1,867.72 billion |

Market Overview

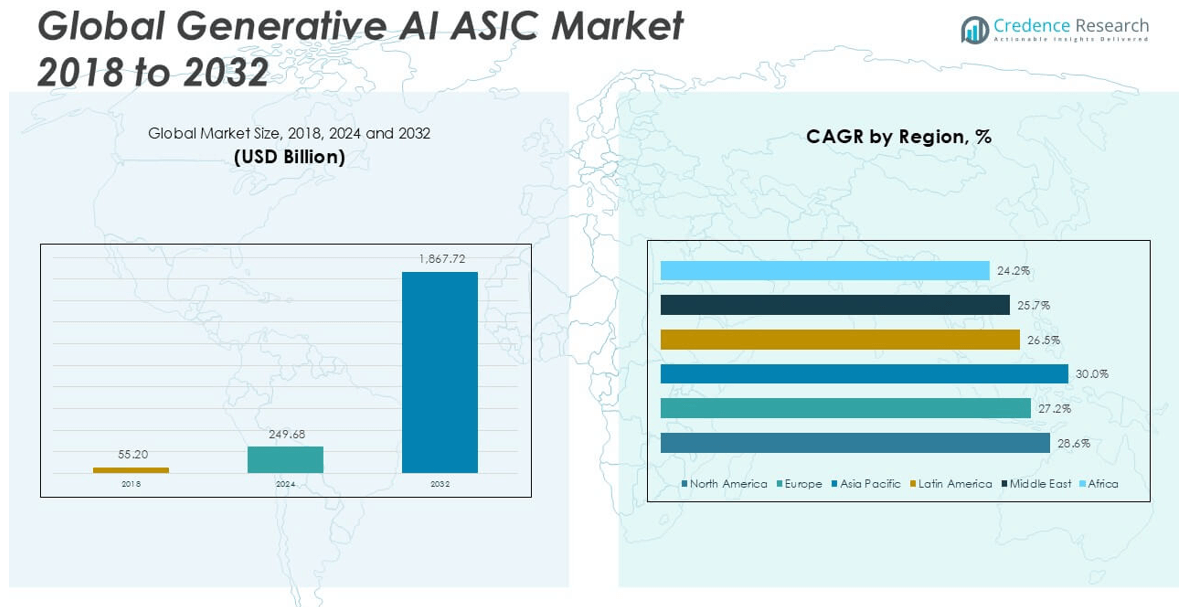

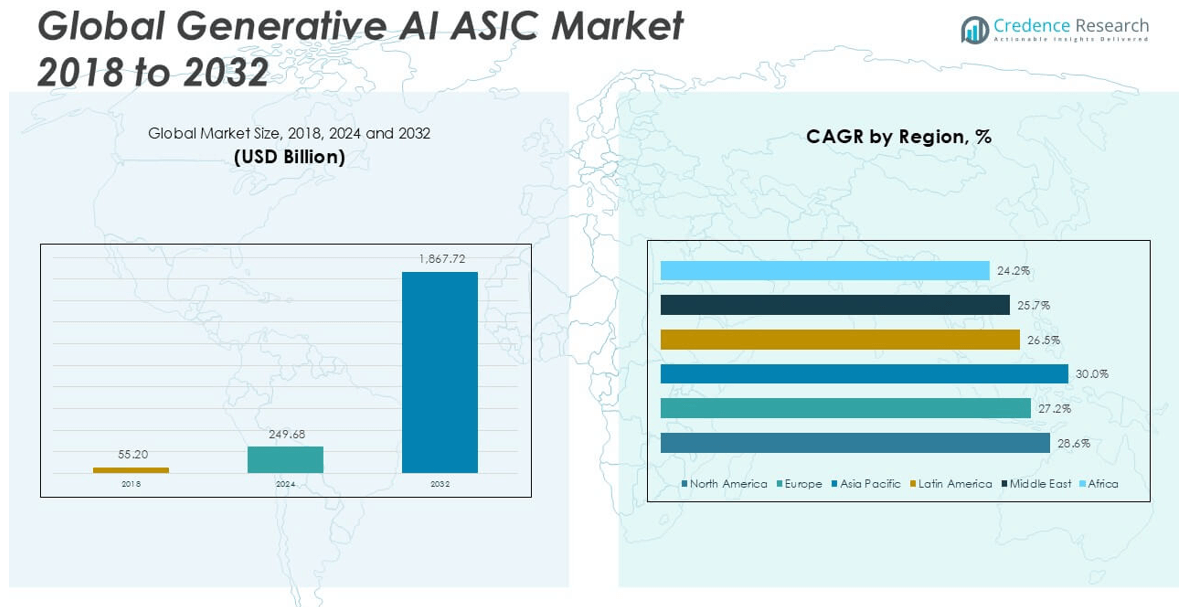

The Generative AI ASIC market size was valued at USD 55.20 billion in 2018, increased to USD 249.68 billion in 2024, and is anticipated to reach USD 1,867.72 billion by 2032, at a CAGR of 28.60% during the forecast period.
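The stated growth rate can be sanity-checked from the endpoint values. The following is a minimal Python sketch, assuming the standard compound annual growth rate formula and an eight-year span from the 2024 base year to 2032; the function name `cagr` is illustrative and not part of the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.286 means 28.6%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Endpoint values in USD billion, taken from the report.
size_2018 = 55.20
size_2024 = 249.68
size_2032 = 1867.72

# Assumption: the forecast CAGR is measured from the 2024 base year to 2032.
forecast_cagr = cagr(size_2024, size_2032, years=2032 - 2024)
# Assumption: the historical figure is compared over the six years 2018 to 2024.
historical_cagr = cagr(size_2018, size_2024, years=2024 - 2018)

print(f"Implied CAGR 2024-2032: {forecast_cagr:.2%}")
print(f"Implied CAGR 2018-2024: {historical_cagr:.2%}")
```

Under these assumptions, the implied forecast rate should land very close to the 28.60% quoted in the report; a noticeable gap would suggest a different base year or compounding convention.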

The Generative AI ASIC market is highly competitive, with key players including NVIDIA, AMD, Intel, Google (TPU), Amazon Web Services, Broadcom, Marvell Technology, Tenstorrent, SambaNova Systems, and Cerebras Systems driving technological advancements. These companies are focusing on chip optimization for high-speed training and inference tasks, custom AI architectures, and integration with cloud and data center infrastructures. North America emerged as the dominant region, accounting for 42.0% of the global market share in 2024, supported by strong R&D investment, advanced semiconductor manufacturing, and the presence of leading AI infrastructure providers.

Market Insights

- The Generative AI ASIC market was valued at USD 249.68 billion in 2024 and is projected to reach USD 1,867.72 billion by 2032, growing at a CAGR of 28.60% during the forecast period.

- The market is driven by the rising demand for high-performance, energy-efficient chips tailored for training and inference in large-scale generative AI models across industries.

- Key trends include the development of custom ASICs by tech giants, increasing adoption of hybrid chips for edge AI, and growing deployment in healthcare, automotive, and enterprise sectors.

- The competitive landscape features major players such as NVIDIA, AMD, Intel, Google, AWS, and Cerebras Systems, with NVIDIA and Google holding strong positions through advanced chip portfolios and cloud integration.

- North America leads the market with a 42.0% share, followed by Asia Pacific at 30.3%, while the Training ASICs segment dominates by chip type due to higher demand in model development.

Market Segmentation Analysis:

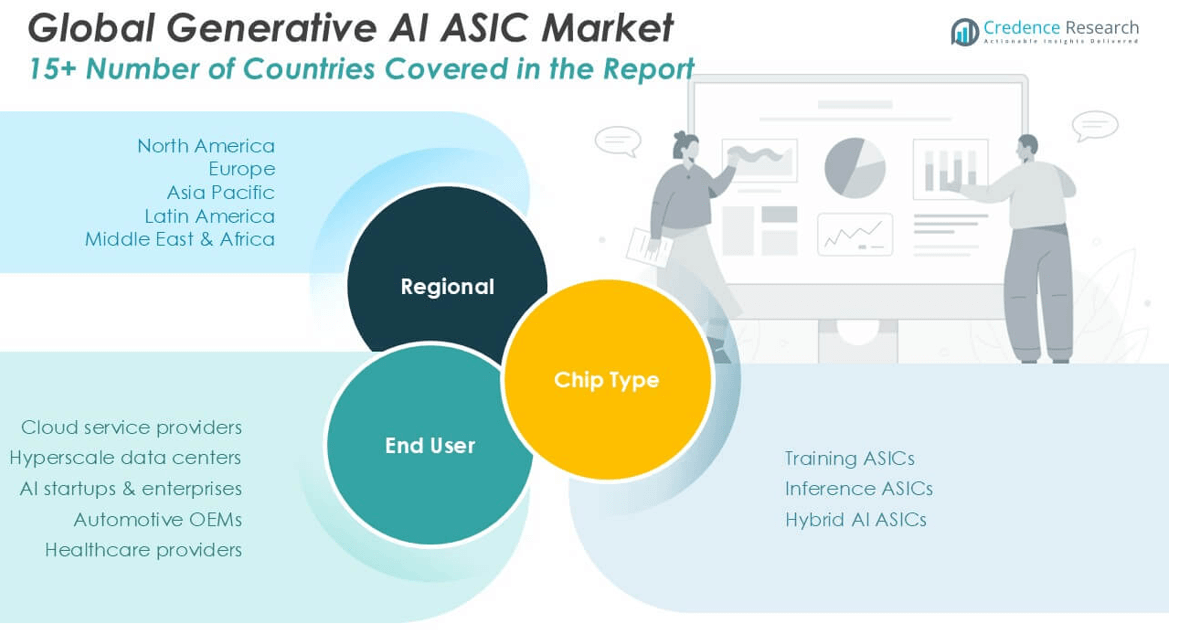

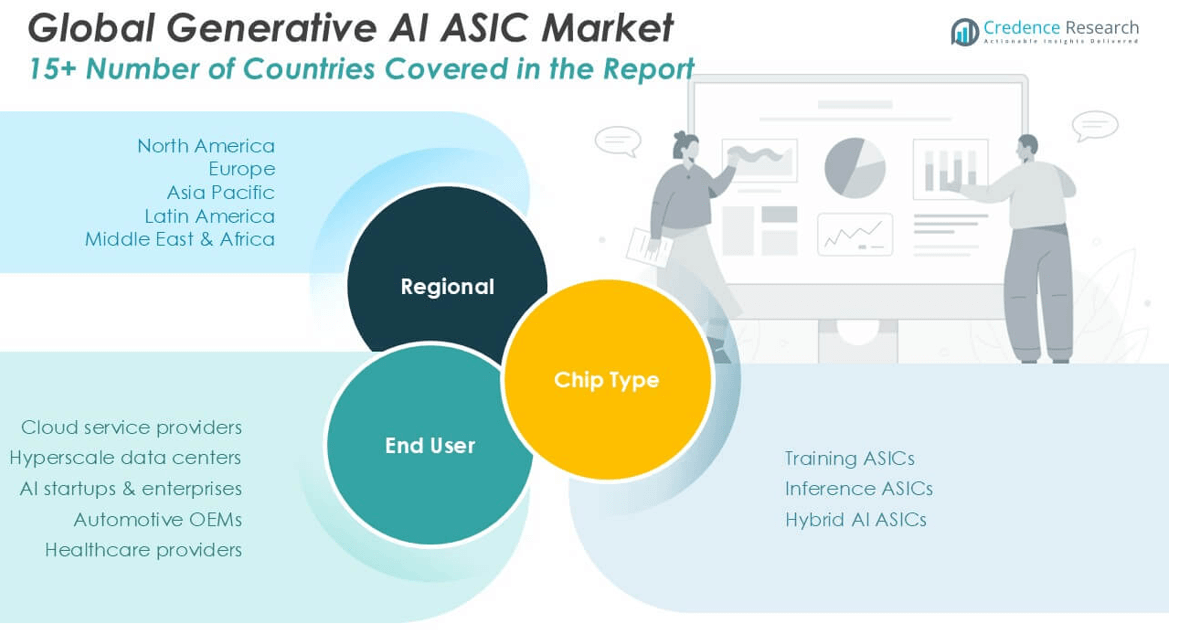

By Chip Type

The Training ASICs segment dominated the Generative AI ASIC market, accounting for the largest revenue share in 2024. This dominance stems from the high computational demand associated with training large generative AI models, including LLMs and diffusion models, which require optimized and energy-efficient hardware. Major tech companies are investing heavily in developing custom training chips to reduce reliance on third-party GPUs and improve performance. Meanwhile, Inference ASICs are gaining traction due to the growing deployment of AI models in real-time applications across industries, including customer service and content generation. Hybrid AI ASICs are emerging as a flexible solution, combining training and inference capabilities to cater to versatile workloads, especially in edge AI and mobile deployments.

- For instance, NVIDIA’s Jetson Orin Nano module supports up to 40 trillion operations per second (TOPS), enabling hybrid AI workloads in edge devices with compact energy profiles.

By End User

Among end-user segments, cloud service providers held the largest market share in 2024, driven by their scale of operations and growing demand for AI infrastructure to support generative AI applications across industries. These providers are investing significantly in custom AI silicon to lower latency and operational costs. Hyperscale data centers follow closely, benefiting from the rapid expansion of AI workloads and the need for optimized processing capabilities. AI startups and enterprises represent a fast-growing segment, adopting ASICs to accelerate innovation and gain competitive advantage. Automotive OEMs are increasingly integrating AI ASICs for autonomous driving features, while healthcare providers use these chips to enhance diagnostics, imaging, and patient data analysis.

- For instance, Tesla’s FSD chip, used in its vehicles for autonomous driving, processes 36 trillion operations per second (TOPS), enabling real-time generative decision-making on the road.

Market Drivers

Rising Demand for High-Performance AI Hardware

The increasing adoption of generative AI models in diverse applications—from content creation and drug discovery to autonomous systems—has accelerated the need for high-performance, energy-efficient hardware. ASICs tailored for generative AI deliver faster computation, lower latency, and reduced power consumption compared to traditional GPUs. Enterprises and cloud service providers are investing heavily in these specialized chips to enhance AI capabilities, reduce infrastructure costs, and support large-scale deployments. This rising demand for dedicated AI hardware solutions is a primary driver of growth in the generative AI ASIC market.

- For instance, Cerebras Systems’ Wafer-Scale Engine 2 (WSE-2) consists of 850,000 AI-optimized cores on a single chip, making it one of the most powerful processors for training large language models like GPT-class architectures.

Expansion of Cloud and Data Center Infrastructure

Cloud service providers and hyperscale data centers are rapidly scaling their infrastructure to meet the growing computational demands of generative AI applications. The integration of ASICs into cloud-based AI platforms enhances processing speed and efficiency, offering users scalable and cost-effective AI services. As businesses transition to AI-driven solutions, demand for cloud-based inference and training capabilities has surged. This trend is pushing providers to invest in custom ASICs, boosting market growth while supporting next-generation AI workloads with lower operational and energy costs.

- For instance, Microsoft’s Azure Maia 100 AI Accelerator is optimized for large model training and inference. It offers 512 MB of on-chip SRAM and supports 8-bit floating point (FP8) operations, improving efficiency and memory utilization in hyperscale AI environments.

Proliferation of AI Startups and Enterprise Adoption

The global rise of AI-focused startups and growing enterprise adoption of generative AI technologies are fueling demand for ASICs. Startups are leveraging these chips to develop cutting-edge applications in sectors like media, design, and healthcare. Simultaneously, established enterprises are deploying generative AI to optimize operations, personalize customer experiences, and innovate products. As cost efficiency and performance become critical, purpose-built ASICs are increasingly favored over general-purpose processors. This widespread interest in deploying scalable and efficient AI systems is significantly contributing to the market’s expansion.

Key Trends & Opportunities

Emergence of Edge AI Applications

Edge computing is emerging as a significant trend, driving demand for compact, low-power ASICs capable of performing generative AI tasks on-device. Applications in mobile devices, automotive systems, and IoT environments are benefiting from real-time AI processing without relying on cloud infrastructure. This shift offers opportunities for ASIC manufacturers to develop hybrid chips that balance performance with energy efficiency. The decentralization of AI workloads is also helping reduce latency, improve privacy, and support new use cases across smart consumer electronics and industrial automation.

- For instance, Apple’s A17 Pro chip, integrated into iPhone 15 Pro, delivers 35 trillion operations per second (TOPS) for on-device AI, enabling generative capabilities like image editing and real-time language translation.

Custom ASIC Development by Tech Giants

Leading technology companies are increasingly designing their own AI ASICs to gain hardware optimization and competitive advantage. Firms such as Google, Amazon, and Meta are investing in proprietary chips to improve control over their AI infrastructure, reduce dependency on third-party vendors, and tailor performance to specific workloads. This trend is reshaping the competitive landscape and opening opportunities for partnerships between chip manufacturers and enterprise AI developers. The ability to deliver application-specific performance enhancements is expected to further boost demand for custom ASIC solutions.

- For instance, Meta’s MTIA v1 accelerator achieves 102.4 trillion operations per second (TOPS) at INT8 precision, enhancing recommendation systems and generative AI tasks across its platforms.

Key Challenges

High Development and Fabrication Costs

One of the major challenges in the generative AI ASIC market is the high cost associated with design, development, and fabrication. ASIC development requires significant upfront investment, specialized engineering expertise, and long development cycles. These barriers often limit entry to large corporations or well-funded startups, restricting innovation from smaller players. Additionally, changes in AI model architecture can render ASICs obsolete, creating financial risks. Balancing performance optimization with cost-efficiency remains a critical hurdle for both developers and end users.

Rapidly Evolving AI Model Complexity

The fast-paced evolution of generative AI models—such as transformers, diffusion models, and multimodal architectures—poses a challenge to ASIC designers. ASICs are highly specialized and lack the flexibility of GPUs or FPGAs to adapt to shifting workloads. As new models require different computational patterns or memory access strategies, previously optimized chips may struggle to maintain compatibility or performance. This technological rigidity may result in reduced ROI for chip developers and hinder their ability to support future advancements in generative AI.

Supply Chain Constraints and Geopolitical Risks

The ASIC supply chain is susceptible to disruptions caused by global semiconductor shortages, export regulations, and geopolitical tensions. Limited access to advanced fabrication facilities, particularly those concentrated in specific regions, can delay production and increase costs. Moreover, export controls on AI hardware imposed by major economies may restrict market access or hinder partnerships across borders. These factors collectively create uncertainty in the supply chain and may limit the scalability and timely deployment of generative AI ASIC solutions worldwide.

Regional Analysis

North America

North America held the largest share of the global generative AI ASIC market in 2024, accounting for approximately 42.0% of the total market, with a valuation of USD 104.79 billion, up from USD 23.42 billion in 2018. The region is projected to reach USD 786.12 billion by 2032, growing at a CAGR of 28.6%. This growth is driven by the presence of major AI technology companies, robust investment in AI R&D, and rapid adoption of ASICs in cloud infrastructure and hyperscale data centers. The U.S. leads this growth with innovation in custom chip development and AI integration across sectors.

Europe

Europe captured around 19.5% of the global market in 2024, reaching USD 48.73 billion from USD 11.34 billion in 2018. The market is forecast to expand to USD 334.65 billion by 2032 at a CAGR of 27.2%. Key drivers include strong regulatory support for AI adoption, advancements in AI-related academic research, and increased investment in digital transformation by EU nations. Germany, the UK, and France are leading the regional market, particularly in healthcare and automotive applications that leverage generative AI with dedicated ASIC hardware.

Asia Pacific

Asia Pacific represented approximately 30.3% of the global generative AI ASIC market in 2024, valued at USD 75.80 billion, rising from USD 15.87 billion in 2018. The region is projected to reach USD 618.64 billion by 2032, exhibiting the highest CAGR of 30.0%. This growth is fueled by large-scale AI investments in China, Japan, South Korea, and India, along with government-led initiatives promoting AI innovation. The region’s strong semiconductor manufacturing capabilities and growing AI startup ecosystem significantly boost ASIC deployment in enterprise and consumer applications.

Latin America

Latin America accounted for nearly 4.3% of the global market in 2024, with a valuation of USD 10.71 billion, up from USD 2.40 billion in 2018. The market is anticipated to grow to USD 70.14 billion by 2032 at a CAGR of 26.5%. Increasing digital transformation efforts, particularly in Brazil and Mexico, and rising demand for AI-driven analytics in financial services and retail are contributing to market expansion. While the region is still developing its semiconductor infrastructure, cloud-based AI solutions using generative AI ASICs are gaining traction.

Middle East

The Middle East held around 2.4% of the global market share in 2024, reaching USD 6.00 billion, up from USD 1.46 billion in 2018. The market is expected to grow to USD 37.44 billion by 2032, recording a CAGR of 25.7%. Countries like the UAE and Saudi Arabia are heavily investing in AI to diversify their economies and develop smart infrastructure. These efforts, combined with the establishment of AI-focused data centers, are boosting the adoption of generative AI ASICs in sectors such as finance, healthcare, and government.

Africa

Africa captured the smallest share of the global market in 2024, approximately 1.5%, with a market size of USD 3.64 billion, growing from USD 0.72 billion in 2018. The market is forecast to reach USD 20.75 billion by 2032, at a CAGR of 24.2%. Although infrastructure limitations remain a challenge, the region is witnessing gradual adoption of AI technologies, particularly in education, agriculture, and public health. South Africa, Nigeria, and Kenya are emerging as key markets where government and private sector collaborations are paving the way for AI integration supported by ASIC technology.
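The regional figures above can be cross-checked against the global totals. The sketch below is a minimal Python example, assuming the six regions are exhaustive and that each regional CAGR spans the eight years from 2024 to 2032; the dictionary layout and variable names are illustrative only.

```python
# (2024 value, 2032 value) in USD billion, as quoted in the regional analysis.
regions = {
    "North America": (104.79, 786.12),
    "Europe": (48.73, 334.65),
    "Asia Pacific": (75.80, 618.64),
    "Latin America": (10.71, 70.14),
    "Middle East": (6.00, 37.44),
    "Africa": (3.64, 20.75),
}

YEARS = 2032 - 2024  # assumption: growth is compounded over eight years

total_2024 = sum(v24 for v24, _ in regions.values())
total_2032 = sum(v32 for _, v32 in regions.values())

summary = {}
for name, (v24, v32) in regions.items():
    share_2024 = v24 / total_2024                 # share of the summed 2024 market
    implied_cagr = (v32 / v24) ** (1 / YEARS) - 1  # implied annual growth rate
    summary[name] = (share_2024, implied_cagr)
    print(f"{name:<14} share {share_2024:6.1%}  implied CAGR {implied_cagr:6.1%}")

print(f"Sum of regions 2024: USD {total_2024:.2f} billion")
print(f"Sum of regions 2032: USD {total_2032:.2f} billion")
```

Small differences between these results and the quoted shares, CAGRs, or global totals are to be expected from rounding in the published figures.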

Market Segmentations:

By Chip Type

- Training ASICs

- Inference ASICs

- Hybrid AI ASICs

By End User

- Cloud service providers

- Hyperscale data centers

- AI startups & enterprises

- Automotive OEMs

- Healthcare providers

By Geography

- North America

- Europe

  - Germany

  - France

  - U.K.

  - Italy

  - Spain

  - Rest of Europe

- Asia Pacific

  - China

  - Japan

  - India

  - South Korea

  - South-east Asia

  - Rest of Asia Pacific

- Latin America

  - Brazil

  - Argentina

  - Rest of Latin America

- Middle East & Africa

  - GCC Countries

  - South Africa

  - Rest of the Middle East and Africa

Competitive Landscape

The competitive landscape of the generative AI ASIC market is characterized by intense innovation, strategic partnerships, and growing investment in custom chip development. Leading players such as NVIDIA, AMD, and Intel dominate with robust chip portfolios and established market presence, focusing on high-performance training and inference solutions. Google and Amazon Web Services are leveraging in-house ASICs like TPUs and Trainium chips to optimize AI workloads within their cloud ecosystems. Meanwhile, specialized companies like Cerebras Systems, Tenstorrent, and SambaNova Systems are driving innovation through next-generation AI architectures tailored for generative tasks. Startups and fabless semiconductor firms are increasingly entering the market with niche, application-specific offerings. Players are prioritizing advancements in processing speed, power efficiency, and integration capabilities to stay competitive. Strategic acquisitions, collaborations with hyperscale data centers, and expansion into edge AI solutions are also shaping the competitive dynamics, as companies aim to gain technological superiority and market share in this rapidly evolving space.

Key Player Analysis

- NVIDIA

- AMD

- Intel

- Google (TPU)

- Amazon Web Services

- Broadcom

- Marvell Technology

- Tenstorrent

- SambaNova Systems

- Cerebras Systems

Recent Developments

- In June 2025, AMD positioned the Instinct MI325X, based on the CDNA 3 architecture, as its latest generative AI accelerator. It features 256 GB of HBM3e memory, delivers up to 1,307.4 TFLOPS at BF16/FP16 precision, and targets large-scale generative AI (LLMs, multimodal models), with AMD claiming up to 1.3x the performance of NVIDIA’s H200.

- In May 2025, Cerebras Systems unveiled the Wafer-Scale Engine 3 (WSE-3), a third-generation AI processor boasting 900,000 cores on a single wafer. This chip is designed for ultra-low-latency deployments in real-time AI applications, offering significant performance improvements over existing solutions.

- In January 2025, Tenstorrent announced the Blackhole AI chip, featuring a RISC-V multi-core architecture, aiming to provide a flexible and efficient alternative to traditional GPUs for AI workloads. Blackhole is designed to match the performance of NVIDIA’s A100 and includes the Metalium toolchain for simplified AI software development. Compared with its predecessors, the chip has more compute units, more on-chip SRAM, and higher bandwidth to handle demanding generative model inference and training tasks. Tenstorrent emphasizes the chip’s flexibility and lower power consumption compared to dominant GPU solutions.

- In January 2025, NVIDIA unveiled NVIDIA Cosmos and the GeForce RTX 50 Series GPUs at CES 2025. The GeForce RTX 50 Series, built on the Blackwell architecture, supports FP4 computing for up to a 2x boost in AI inference performance, optimized for generative AI workloads. NVIDIA also announced Project DIGITS, its most compact and powerful developer-targeted AI supercomputer, launching in May 2025 and powered by the new GB10 Grace Blackwell Superchip.

Market Concentration & Characteristics

The Generative AI ASIC market exhibits high market concentration, with a few dominant players controlling a significant share of global revenue. Companies such as NVIDIA, AMD, Intel, Google, and Amazon Web Services lead the market due to their strong R&D capabilities, advanced fabrication technologies, and extensive integration within cloud and data center ecosystems. It is characterized by rapid innovation cycles, capital-intensive development, and high entry barriers linked to design complexity and manufacturing costs. The market favors vertically integrated firms that can optimize performance across hardware and software stacks. It continues to evolve with increasing demand for low-latency, energy-efficient processing across training and inference tasks. Strategic partnerships between chipmakers and cloud service providers play a critical role in accelerating deployment and scaling AI applications. The demand for customized, high-performance ASICs tailored for generative models is driving consolidation among key players and encouraging investment in proprietary architectures. Emerging players focus on niche use cases and edge AI to differentiate.

Report Coverage

The research report offers an in-depth analysis based on Chip Type, End User and Geography. It details leading market players, providing an overview of their business, product offerings, investments, revenue streams, and key applications. Additionally, the report includes insights into the competitive environment, SWOT analysis, current market trends, as well as the primary drivers and constraints. Furthermore, it discusses various factors that have driven market expansion in recent years. The report also explores market dynamics, regulatory scenarios, and technological advancements that are shaping the industry. It assesses the impact of external factors and global economic changes on market growth. Lastly, it provides strategic recommendations for new entrants and established companies to navigate the complexities of the market.

Future Outlook

- The market will experience strong growth driven by rising adoption of generative AI across industries.

- Demand for custom ASICs will increase as enterprises seek optimized performance and energy efficiency.

- Cloud service providers will continue to dominate deployments with in-house AI chip development.

- Asia Pacific will expand rapidly due to strong semiconductor manufacturing and government support.

- Hybrid AI ASICs will gain traction for supporting both training and inference on edge devices.

- Automotive and healthcare sectors will emerge as key end users of generative AI hardware.

- Leading players will focus on expanding chip design capabilities through acquisitions and R&D investment.

- New entrants will target niche markets with application-specific generative AI solutions.

- Partnerships between chipmakers and AI software firms will intensify to deliver integrated solutions.

- Scalability, low latency, and power efficiency will remain core design priorities for future ASICs.