Market Overview

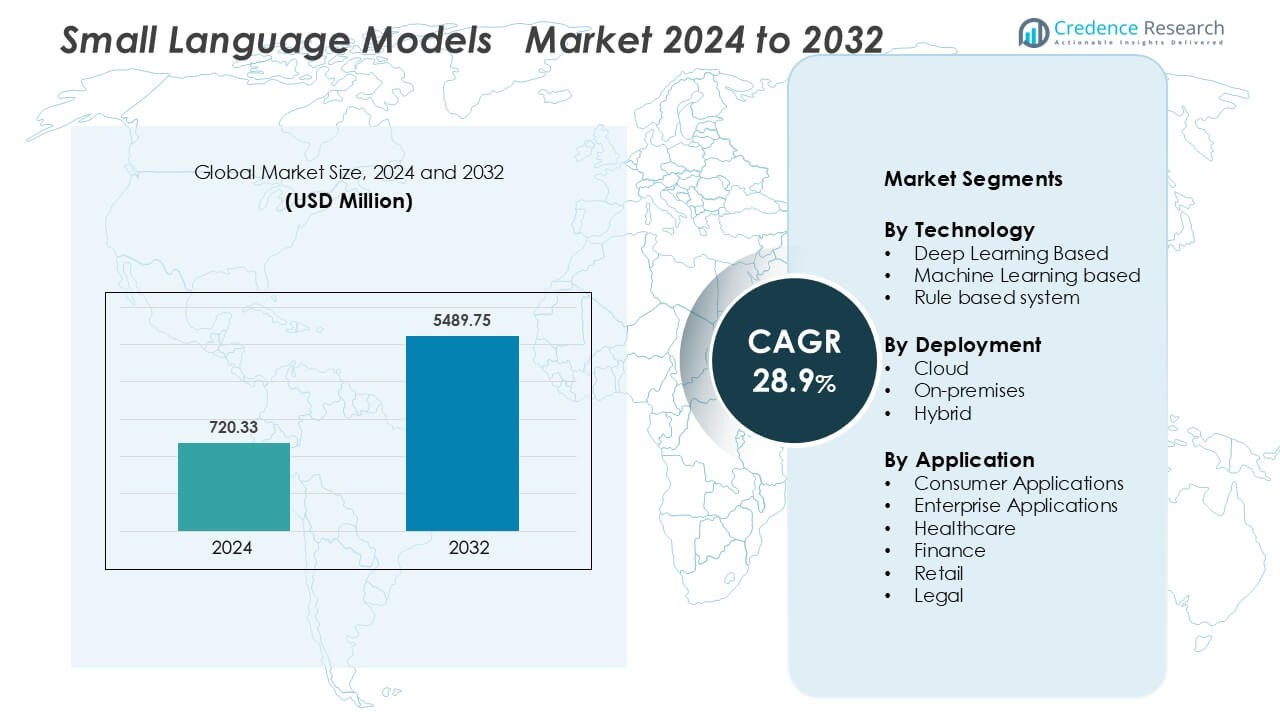

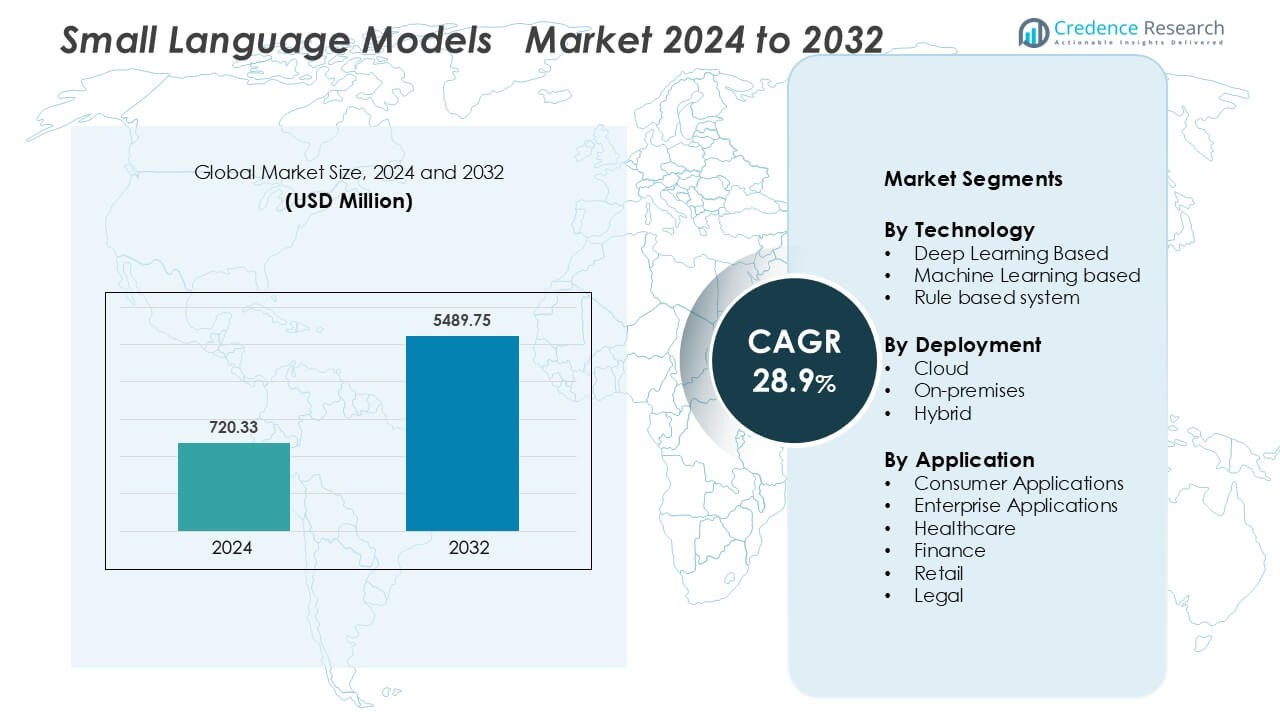

The Small Language Models market was valued at USD 720.33 million in 2024 and is anticipated to reach USD 5,489.75 million by 2032, growing at a CAGR of 28.9% during the forecast period.

| Report Attribute | Details |
|---|---|
| Historical Period | 2020-2023 |
| Base Year | 2024 |
| Forecast Period | 2025-2032 |
| Small Language Models Market Size 2024 | USD 720.33 Million |
| Small Language Models Market, CAGR | 28.9% |
| Small Language Models Market Size 2032 | USD 5,489.75 Million |
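
The headline figures (USD 720.33 million in 2024, USD 5,489.75 million in 2032, and a 28.9% CAGR) can be cross-checked with the standard compound-growth formula. The sketch below is illustrative only: it assumes eight annual compounding periods between the 2024 base year and 2032, and the function names are hypothetical rather than taken from the report.

```python
def project_value(base_value: float, cagr: float, years: int) -> float:
    """Compound a base-year value forward at a constant annual growth rate."""
    return base_value * (1.0 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Back out the constant annual growth rate that links two values."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


BASE_2024 = 720.33      # USD million, from the report
TARGET_2032 = 5489.75   # USD million, from the report
YEARS = 2032 - 2024     # assumption: eight compounding periods

projected_2032 = project_value(BASE_2024, 0.289, YEARS)
rate = implied_cagr(BASE_2024, TARGET_2032, YEARS)

print(f"Projected 2032 value: USD {projected_2032:,.2f} million")
print(f"Implied CAGR: {rate:.1%}")
```

Under that eight-period assumption, the projected value and the implied rate both agree with the reported figures to within rounding.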

The Small Language Models (SLM) market is highly competitive, with leading players such as Meta, Databricks, IBM Watson AI, Amazon AWS AI, Cohere, Nvidia, Microsoft, Cerebras Systems, Apple AI, and Google driving innovation through model efficiency, scalability, and domain specialization. These companies focus on optimizing smaller architectures for on-device processing, cloud integration, and enterprise deployment. North America dominates the global market, accounting for approximately 48% of total market share, supported by advanced AI infrastructure, strong investment in R&D, and widespread enterprise adoption. Strategic collaborations, edge computing advancements, and open AI ecosystems continue to shape competitive differentiation and future growth trajectories in this evolving landscape.


Market Insights

- The Small Language Models (SLM) market was valued at USD 720.33 million in 2024 and is projected to grow at a CAGR of around 28.9% during the forecast period, driven by increasing demand for efficient and scalable AI solutions across industries.

- Key market drivers include the growing adoption of edge AI, rising data privacy concerns, and the cost efficiency of deploying compact models for real-time applications.

- Emerging trends highlight the shift toward domain-specific and multilingual SLMs, along with advancements in model compression, quantization, and on-device optimization.

- The competitive landscape features major players such as Meta, Microsoft, Google, Amazon AWS AI, IBM Watson AI, Nvidia, Apple AI, Cohere, Databricks, and Cerebras Systems, focusing on innovation and strategic collaborations.

- North America leads with approximately 48% market share, followed by Europe (24%) and Asia Pacific (18%), while the machine learning-based segment holds the dominant share by technology type.

Market Segmentation Analysis:

By Technology

In the technology segmentation, the machine learning-based sub-segment stands out as dominant, commanding approximately 55.1% of market revenue. This prominence is driven by its relative ease of implementation, lower computational demands compared to deep learning, and broad applicability across tasks such as classification, summarisation and translation. Meanwhile, deep learning-based systems are projected to grow rapidly as architectures become more efficient and capable of handling complex language patterns.

- For instance, Microsoft’s Phi-3-mini model operates with just 3.8 billion parameters while delivering instruction-tuned performance on benchmarks for language understanding, math and code reasoning.
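
To give a sense of scale for the 3.8 billion parameter figure, the sketch below estimates how much memory the weights alone would occupy at several common storage precisions. It is a back-of-the-envelope calculation, not a vendor specification: it ignores activations, caches and runtime overhead, and the helper name `weight_memory_gb` is hypothetical.

```python
PARAMS = 3.8e9  # Phi-3-mini parameter count, as cited above

# Bytes needed to store one weight at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


footprints = {
    name: weight_memory_gb(PARAMS, size)
    for name, size in BYTES_PER_PARAM.items()
}

for name, gb in footprints.items():
    print(f"{name}: {gb:.1f} GB")
```

Halving the precision halves the weight footprint, which is one reason compact models at reduced precision are candidates for phones and other memory-constrained devices.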

By Deployment

Within the deployment segmentation, the cloud sub-segment emerged as the dominant deployment mode, capturing around 44.8% of global revenue. The growth here is propelled by scalable infrastructure, rapid time-to-market, and integration with existing cloud services, which reduce upfront investment in physical hardware. On-premises and hybrid deployments are gaining traction, especially where data privacy, latency or regulatory constraints make enterprises prefer local or mixed arrangements.

- For instance, AWS’s Graviton3 processors, built on the Arm Neoverse platform, are specifically optimized for machine learning (ML) workloads. They feature bfloat16 support and wider Single Instruction Multiple Data (SIMD) vector units, delivering up to 3 times the ML performance of Graviton2 instances.

By Application

In the application segmentation, consumer applications such as virtual assistants, chatbots and personalised content generation hold the largest market share in recent years. This dominance arises from high user demand, the relatively lower technical barrier for deployment and broad appeal across devices and platforms. Meanwhile, vertical-specific applications in healthcare, finance, retail and legal are gaining momentum driven by the need for domain-specific language understanding, regulatory compliance and improved customer engagement.

- For instance, Meta Platforms’s “Meta AI” reached 1 billion monthly active users across its apps by mid-2025, showcasing the scale of mainstream consumer engagement.

Key Growth Drivers

Edge deployment, latency reduction and data privacy

Enterprises and device-makers increasingly adopt small language models (SLMs) to enable real-time, on-device language capabilities that reduce latency and preserve data locality. SLMs deliver practical inference on edge CPUs, mobile SoCs and constrained servers, allowing applications (virtual assistants, customer kiosks, and industrial agents) to operate offline or with intermittent connectivity while keeping sensitive data on-premises. This demand is propelled by tighter privacy regulations and enterprise risk policies that favor data minimization and local processing over routing text to third-party cloud APIs. Advances in efficient architectures and hardware optimization further lower the barrier to edge deployment, accelerating integration across consumer electronics and regulated verticals.

- For instance, NVIDIA is actively developing and promoting small language models (SLMs) specifically for edge and on-device deployment. In August 2024, NVIDIA introduced its first on-device SLM, Nemotron-4 4B Instruct, designed for low memory usage and quick response times on devices like the Jetson.

Cost efficiency, scalability and enterprise economics

Cost and compute efficiency drive SLM adoption where enterprises require scalable, predictable AI without the expense of giant models. Small models reduce infrastructure, inference and monitoring costs while enabling horizontal rollout across thousands of endpoints — an important economic advantage for mid-sized firms and cost-sensitive SaaS providers. Providers and cloud vendors have introduced compact “mini” variants to address price-performance gaps, encouraging migration from expensive large models for many routine tasks such as summarization, extraction and conversational interfaces. Combined with rising availability of managed deployment frameworks and model catalogs, the lower TCO and faster time-to-value position SLMs as a pragmatic choice for production systems.

- For instance, Nvidia released its Nemotron-4-Mini-Hindi-4B model with 4 billion parameters tailored for Hindi language and edge inference workflows, reducing hardware requirements for regional deployments.

Verticalization and regulatory/compliance imperatives

Industry-specific demand and regulatory constraints are driving investments in domain-adapted SLMs. Sectors such as healthcare, finance and legal require models tuned for domain vocabulary, provenance, auditability and explicit privacy controls; SLMs facilitate fine-grained governance because they are easier to validate, log and certify than sprawling LLM stacks. Regulators and corporate compliance functions favor deployable, explainable models that can be hosted on-premises or within approved clouds, prompting vendors to offer domain-specialized SLMs and curated datasets. This verticalization increases purchasing momentum as firms prioritize models that meet both technical requirements and compliance checklists.

- For instance, NVIDIA introduced its BioNeMo platform optimized for life sciences, enabling on-premises fine-tuning of small models for drug discovery under HIPAA compliance frameworks.

Key Trends & Opportunities

Model compression, distillation and efficient architectures

The maturing toolbox for compression (pruning, quantization, knowledge distillation and architecture search) continues to shrink model footprints while retaining task accuracy, creating a direct opportunity to expand SLM use across embedded and low-power devices. These techniques let developers trade parameter count for operational cost reductions without linear accuracy loss, enabling new product form factors and offline features. Vendors who productize robust compression pipelines and provide pre-compressed, validated models for common tasks stand to gain rapid adoption. The convergence of software optimizations and specialized runtimes opens monetizable opportunities in tooling, benchmarking and certification for compressed models.

- For instance, Qualcomm introduced the AI Model Efficiency Toolkit (AIMET), which supports pruning and quantization workflows validated on over 40 edge devices, enabling real-time performance in Snapdragon platforms.
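
As a rough illustration of the quantization technique named above, the sketch below applies symmetric 8-bit quantization to a handful of weights and measures the rounding error after converting back to floats. It is a minimal, library-free example and does not represent AIMET or any vendor toolkit: the function names are hypothetical and the weight values are made up for demonstration.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.05, 0.40, -0.63]  # made-up values for illustration

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("int8 values:", q)
print(f"scale: {scale:.4f}, max round-trip error: {max_error:.5f}")
```

Each weight is stored in one byte instead of four, and the round-trip error stays within half a quantization step. Production pipelines typically go further, for example with per-channel scales or calibration data, which this sketch omits.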

Domain adaptation and localized language capabilities

Demand for regionally and industry-specific language support is creating a market for compact, localized SLMs that handle vernaculars, dialects and professional jargon with limited compute. Regional models (e.g., language models optimized for Hindi and other non-English languages) and vertical fine-tuning enable more relevant outputs and higher user acceptance in emerging markets. This presents an opportunity for vendors to partner with local platforms, telcos and OEMs to deploy lightweight models tailored for language coverage, regulatory needs and cultural nuance, driving both revenue and market penetration outside major Anglophone markets.

Tooling, open ecosystems and democratization of AI

The rise of open-model releases, community toolchains and cloud model catalogs is democratizing access to production-ready SLMs and associated MLOps capabilities. As vendors publish mini variants and toolkits for deployment and monitoring, smaller teams can deliver conversational features and automation without heavy research investments. This democratization creates business opportunities for middleware providers (model registries, optimized runtimes, privacy wrappers) and for consultancies that help enterprises adapt SLMs into regulated workflows. Firms that bundle governance, monitoring and retraining pathways with SLM offerings will capture a growing portion of adoption that values turnkey reliability.

Key Challenges

Accuracy, hallucination and bias in constrained models

Despite efficiency gains, SLMs face pronounced risks around factual accuracy, hallucination and biased outputs when operating with reduced parameter budgets or narrow training data. Constrained models often trade generalization capacity for compactness, which can amplify errors on edge or niche tasks and complicate trust in automated decisions. For regulated domains, even rare hallucinations can have material consequences; thus organizations must invest in verification layers, retrieval-augmented pipelines, and post-inference checks. Developing robust evaluation metrics, domain-specific validation sets and mitigation processes increases deployment complexity and cost, slowing adoption where reliability is mandatory.

Fragmentation, interoperability and lifecycle management

The ecosystem for SLMs risks fragmentation across model formats, runtime engines and deployment targets, creating interoperability and maintenance challenges for enterprises that manage mixed fleets of edge and cloud models. Different quantization schemes, inference runtimes and tuning artifacts complicate CI/CD for models and raise operational overhead for versioning, security patching and performance tuning. Moreover, ensuring consistent governance, explainability and audit trails across distributed deployments requires integrated MLOps investments. These friction points increase total cost of ownership and lengthen procurement cycles, particularly for organizations without mature ML engineering practices.

Regional Analysis

North America

North America leads the small language models market with approximately 48% share, driven by concentrated R&D investment, a dense cluster of hyperscalers, and strong enterprise adoption across technology, finance and healthcare verticals. U.S. and Canadian firms prioritize low-latency, privacy-preserving deployments and readily integrate SLMs into production pipelines, accelerating commercial uptake. Robust venture capital, plentiful AI talent and close partnerships between cloud providers and chip makers lower time-to-market for optimized models. These structural advantages sustain leadership while competition intensifies from well-funded APAC players.

Europe

Europe accounts for roughly 24% of the SLM market, where adoption emphasizes data protection, explainability and sectoral compliance. Enterprises in the U.K., Germany, France and the Nordics favor on-premises and hybrid SLM deployments that meet GDPR and industry standards, encouraging vendors to provide audit-friendly, certifiable models. Strong public and private research programs support localized language capabilities and domain adaptation for finance, legal and healthcare. While growth remains steady, regulatory scrutiny shapes procurement cycles and vendor selection, prompting deeper investment in governance, model validation and local partnerships.

Asia Pacific

Asia Pacific holds around 18% of the market but exhibits the fastest compound growth as China, India, Japan and Southeast Asian markets scale SLM adoption across consumer devices, enterprise SaaS and telecom edge use cases. Demand for vernacular language support, lower-cost on-device inference and telco partnerships drives regional customization of compact models. Government initiatives, localized datasets and expanding AI talent pools accelerate commercial deployments, while cloud-to-edge solutions bridge infrastructure gaps. Market entrants focused on multilingual support and hardware-aware optimization find high growth potential across both urban and emerging markets.

Latin America

Latin America comprises approximately 6% of the SLM market, characterized by selective adoption within BFSI, contact centers and mobile consumer services. Brazil, Mexico and Argentina lead regional demand, where cost sensitivity and the need for Portuguese and Spanish vernacular models drive preference for lightweight, deployable solutions. Local system integrators and regional cloud partners enable pilots and rollouts, but limited AI infrastructure and variable regulatory frameworks slow broad enterprise adoption. Opportunities center on affordable edge deployments, multilingual offerings and managed services that reduce integration overhead for mid-market customers.

Middle East & Africa

Middle East & Africa represent about 4% of the SLM market, with adoption concentrated in government services, telecom and oil & gas sectors where privacy, latency and localized Arabic language support matter. Wealthier Gulf states invest in AI hubs and sovereign cloud capabilities, supporting pilots for domain-adapted SLMs, while North African markets focus on multilingual customer-facing applications. Infrastructure variability and skills gaps limit scale, but strategic national AI programs and partnerships with global vendors create a pathway for steady growth and targeted vertical deployments.
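
The regional shares quoted in this section can be sanity-checked and converted into approximate dollar values. The sketch below is an assumption-laden illustration: the report gives the shares only as approximations, and applying them to the 2024 base-year market size is an assumption made here for the arithmetic, not a figure published in the report.

```python
TOTAL_2024 = 720.33  # USD million, 2024 market size from the report

# Approximate regional shares as quoted in the regional analysis.
shares = {
    "North America": 0.48,
    "Europe": 0.24,
    "Asia Pacific": 0.18,
    "Latin America": 0.06,
    "Middle East & Africa": 0.04,
}

# The five shares should account for the whole market.
share_total = sum(shares.values())

# Assumption: the shares apply to the 2024 base-year value.
regional_value = {region: TOTAL_2024 * share for region, share in shares.items()}

print(f"Sum of shares: {share_total:.0%}")
for region, value in regional_value.items():
    print(f"{region}: about USD {value:,.1f} million")
```

The shares sum to 100%, so the five regions cover the full market with no residual category.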

Market Segmentations:

By Technology

- Deep Learning Based

- Machine Learning based

- Rule based system

By Deployment

- Cloud

- On-premises

- Hybrid

By Application

- Consumer Applications

- Enterprise Applications

- Healthcare

- Finance

- Retail

- Legal

By Geography

- North America

- Europe

  - Germany

  - France

  - U.K.

  - Italy

  - Spain

  - Rest of Europe

- Asia Pacific

  - China

  - Japan

  - India

  - South Korea

  - South-east Asia

  - Rest of Asia Pacific

- Latin America

  - Brazil

  - Argentina

  - Rest of Latin America

- Middle East & Africa

  - GCC Countries

  - South Africa

  - Rest of the Middle East and Africa

Competitive Landscape

The small language models (SLM) market is rapidly evolving, with major technology leaders focusing on efficiency, scalability, and domain-specific intelligence. Google and Microsoft lead the market through compact AI architectures optimized for on-device processing and low-latency applications. Meta and Amazon AWS AI emphasize open-weight and cloud-native models designed to enhance accessibility and developer integration. IBM Watson AI and Databricks strengthen enterprise adoption through model fine-tuning tools and seamless data integration within analytics platforms. Nvidia and Cerebras Systems provide hardware acceleration and AI infrastructure solutions that significantly reduce training time and computational costs for small models. Cohere and Apple AI specialize in privacy-centric and energy-efficient SLMs, enabling secure deployment on mobile and edge devices. Companies are prioritizing multimodal capability, adaptive learning, and cost-efficient inference to meet growing demand across sectors such as finance, healthcare, and customer engagement while maintaining responsible AI standards.


Key Player Analysis

- Meta

- Databricks

- IBM Watson AI

- Amazon AWS AI

- Cohere

- Nvidia

- Microsoft

- Cerebras Systems

- Apple AI

- Google

Recent Developments

- In April 2024, Microsoft unveiled Phi-3-mini, a lightweight AI model designed to deliver advanced AI capabilities at reduced cost. The small language model is accessible through the Microsoft Azure AI Model Catalog, Hugging Face, Ollama, and NVIDIA NIM. Phi-3-mini is the first in a series of open small language models from Microsoft.

- In April 2023, Alibaba Cloud, the digital technology and intelligence division of Alibaba Group, announced the launch of its large language model, Tongyi Qianwen. The model is to be incorporated across Alibaba’s businesses to enhance user experiences, and customers and developers can also access it to build customized AI features cost-effectively.

Report Coverage

The research report offers an in-depth analysis based on Technology, Deployment, Application and Geography. It details leading market players, providing an overview of their business, product offerings, investments, revenue streams, and key applications. Additionally, the report includes insights into the competitive environment, SWOT analysis, current market trends, as well as the primary drivers and constraints. Furthermore, it discusses various factors that have driven market expansion in recent years. The report also explores market dynamics, regulatory scenarios, and technological advancements that are shaping the industry. It assesses the impact of external factors and global economic changes on market growth. Lastly, it provides strategic recommendations for new entrants and established companies to navigate the complexities of the market.

Future Outlook

- The market will experience rapid expansion as enterprises prioritize efficient and privacy-preserving AI solutions.

- Advancements in model compression and quantization will make SLMs more accessible for edge and mobile devices.

- Cloud providers will integrate optimized SLM services to enable scalable deployment across industries.

- Domain-specific and multilingual models will gain traction to meet regional and sectoral needs.

- Open-source frameworks will accelerate innovation and reduce development barriers for smaller organizations.

- Partnerships between hardware vendors and AI developers will enhance inference performance and energy efficiency.

- Regulatory compliance and explainability will drive adoption in finance, healthcare, and legal sectors.

- The integration of SLMs into enterprise workflows will boost automation and decision-support systems.

- Continuous improvements in training data quality and bias mitigation will strengthen model reliability.

- Competitive pricing and flexible deployment models will expand market penetration across emerging economies.